- PIM Sparse mode

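- Traffic is not flooded unless it is requested.

- Uses the RP as the root of the shared tree.

- The first-hop PIM DR hears the sender and reports the (S,G) to the RP with a PIM Register message.

- The last-hop router hears the IGMP Join and sends a (*,G) PIM Join towards the RP.

- The RP sends an (S,G) PIM Join towards the source, building the path from the source to the RP so traffic can flow down the shared tree.

- Once traffic flows end-to-end, the last-hop router can send an (S,G) SPT Join towards the source and prune the source from the shared tree.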

- Without an RP:

- Sources cannot register.

- Joins cannot be processed.

- All routers must agree on the same RP address on a per-group basis.

- Registers and Joins that name an invalid RP for the group are rejected.

- RP address assignment.

- Can be done statically or dynamically.

- Dynamically
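The per-group RP agreement above can be sketched in Python. This is a minimal model, not Cisco's implementation: the mapping table, the `1.1.1.1` RP address, and the function names are hypothetical, and the sketch assumes the most specific group range wins if ranges overlap. A Register (sent to an RP) or a (*,G) Join (which names an RP) is only accepted when the RP it names matches the local mapping for that group.

```python
from ipaddress import IPv4Address, IPv4Network
from typing import Dict, Optional

# Hypothetical group-to-RP table; every router in the domain must hold the same one.
RP_MAP: Dict[IPv4Network, IPv4Address] = {
    IPv4Network("224.0.0.0/4"): IPv4Address("1.1.1.1"),
}


def rp_for_group(group: str, rp_map: Dict[IPv4Network, IPv4Address]) -> Optional[IPv4Address]:
    """Return the RP for a group, or None when no range covers it (no RP known)."""
    g = IPv4Address(group)
    matches = [net for net in rp_map if g in net]
    if not matches:
        return None
    # Assumption for this sketch: the most specific (longest) range wins.
    return rp_map[max(matches, key=lambda net: net.prefixlen)]


def rp_is_valid(claimed_rp: str, group: str, rp_map: Dict[IPv4Network, IPv4Address]) -> bool:
    """Compare the RP named in a Register or (*,G) Join with the local mapping."""
    local_rp = rp_for_group(group, rp_map)
    return local_rp is not None and local_rp == IPv4Address(claimed_rp)


if __name__ == "__main__":
    print(rp_for_group("225.1.1.1", RP_MAP))              # 1.1.1.1
    print(rp_is_valid("1.1.1.1", "225.1.1.1", RP_MAP))    # True
    print(rp_is_valid("2.2.2.2", "225.1.1.1", RP_MAP))    # False: invalid RP
    print(rp_for_group("225.1.1.1", {}))                  # None: no RP, nothing can register
```

With an empty table, `rp_for_group` returns `None`, which mirrors the "Without an RP" case: there is nowhere to send a Register or a (*,G) Join.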

Auto RP:

- Cisco proprietary

- Two functional roles

- Candidate RP

- Devices willing to be the RP

- Mapping Agent

- Chooses the RP among the candidates and relays this information to the rest of the PIM domain.

- Allows for redundancy of RPs

Auto RP Process:

- Candidate RPs send announcements listing the group ranges they are willing to service.

- Sent as (S, 224.0.1.39), the Cisco-RP-Announce group.

- The Mapping Agent discovers the Candidate RPs and advertises their mappings to all other routers.

- Joins (*, 224.0.1.39) to learn about Candidate RPs.

- Announces the final RP mappings as (S, 224.0.1.40), the Cisco-RP-Discovery group.

- Caveats:

- Dynamically learned RP mappings are preferred over static ones.

- Auto-RP control plane messages are subject to the RPF check.

- Mapping Agents must join (*, 224.0.1.39) to hear Candidate RP announcements, and all routers must join (*, 224.0.1.40) to hear Mapping Agent discoveries.

- PIM Sparse Mode

- Cannot join the Auto-RP groups without knowing where the RP is located.

- Cannot know where the RP is without joining the Auto-RP groups.

- Circular dependency (a chicken-and-egg problem).

- Solutions:

- Default RP Assignment

- Assign a static RP for groups 224.0.1.39 and 224.0.1.40.

- Defeats the purpose of dynamic assignment.

- PIM Sparse-Dense Mode

- Dense for groups without an RP

- Sparse for all others.
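The circular dependency and the mode-based workarounds (sparse-dense mode above, and the Auto-RP Listener feature listed after the figure) can be sketched as a per-group decision. This is a simplified model, not every detail of IOS behavior: the function names and mode strings are hypothetical, while 224.0.1.39 and 224.0.1.40 are the Auto-RP groups from the text. It also encodes the caveat that a dynamically learned RP is preferred over a static one.

```python
from typing import Optional

# Auto-RP control groups: Cisco-RP-Announce and Cisco-RP-Discovery.
AUTO_RP_GROUPS = {"224.0.1.39", "224.0.1.40"}


def effective_rp(dynamic_rp: Optional[str], static_rp: Optional[str]) -> Optional[str]:
    """Default preference from the caveats: a dynamically learned RP beats a static one."""
    return dynamic_rp if dynamic_rp is not None else static_rp


def forwarding_mode(group: str, interface_mode: str, rp: Optional[str],
                    autorp_listener: bool = False) -> str:
    """Return "dense" or "sparse" for a group on a PIM interface (simplified model)."""
    if interface_mode == "sparse-dense":
        # Dense for groups without an RP (e.g. the Auto-RP groups), sparse otherwise.
        return "sparse" if rp is not None else "dense"
    if interface_mode == "sparse":
        # Auto-RP Listener: only the two Auto-RP groups are flooded in dense mode.
        if autorp_listener and group in AUTO_RP_GROUPS:
            return "dense"
        # Plain sparse mode: a group with no RP stays sparse and cannot build a tree.
        return "sparse"
    raise ValueError(f"unsupported interface mode: {interface_mode}")


if __name__ == "__main__":
    print(forwarding_mode("224.0.1.40", "sparse-dense", None))                    # dense
    print(forwarding_mode("224.0.1.39", "sparse", None, autorp_listener=True))   # dense
    print(forwarding_mode("239.0.1.1", "sparse", None, autorp_listener=True))    # sparse
```

The design difference shows up in the last case: with the listener, an ordinary group that has no RP is not flooded, whereas sparse-dense mode would fall back to dense for it.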

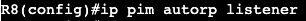

- Auto-RP Listener feature

- Dense for 224.0.1.39 and .40 only

- Sparse for others.

- Auto-RP with multiple candidates.

- For redundancy and load distribution, multiple Candidate RPs can be configured.

- An ACL applied on each Candidate RP controls which groups it services.

- If multiple Candidate RPs announce the same group range, the Mapping Agent chooses the highest RP address.

- Mapping Agent Security

- Needs to be protected against false Candidate RP announcements.

- The RP Announce Filter feature on the Mapping Agent can permit or deny which Candidate RPs are accepted.
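The Mapping Agent's choice among multiple candidates can be sketched as follows. This is a hypothetical model, not Cisco code: the function name and addresses are illustrative, and while the real RP Announce Filter matches both an RP list and a group list, this sketch filters on the candidate's RP address only. For each announced group range, the highest permitted RP address wins.

```python
from ipaddress import IPv4Address, IPv4Network
from typing import Dict, Iterable, Optional, Set, Tuple


def select_rps(announcements: Iterable[Tuple[str, str]],
               permitted_rps: Optional[Set[str]] = None) -> Dict[IPv4Network, IPv4Address]:
    """Pick one RP per announced group range, as a Mapping Agent would.

    announcements: (candidate RP address, group range) pairs heard on 224.0.1.39.
    permitted_rps: optional allow-list standing in for an RP announce filter.
    """
    allowed = None if permitted_rps is None else {IPv4Address(a) for a in permitted_rps}
    chosen: Dict[IPv4Network, IPv4Address] = {}
    for rp_str, range_str in announcements:
        rp, group_range = IPv4Address(rp_str), IPv4Network(range_str)
        if allowed is not None and rp not in allowed:
            continue  # filtered out, e.g. a rogue Candidate RP
        # Same range from several candidates: the highest RP address wins.
        if group_range not in chosen or rp > chosen[group_range]:
            chosen[group_range] = rp
    return chosen


if __name__ == "__main__":
    heard = [("1.1.1.1", "224.0.0.0/4"), ("2.2.2.2", "224.0.0.0/4")]
    print(select_rps(heard))                            # 224.0.0.0/4 -> 2.2.2.2
    print(select_rps(heard, permitted_rps={"1.1.1.1"})) # 224.0.0.0/4 -> 1.1.1.1
```

The second call shows why the filter matters: an unexpected candidate with a higher address would otherwise win the election.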

Auto-RP Configuration:

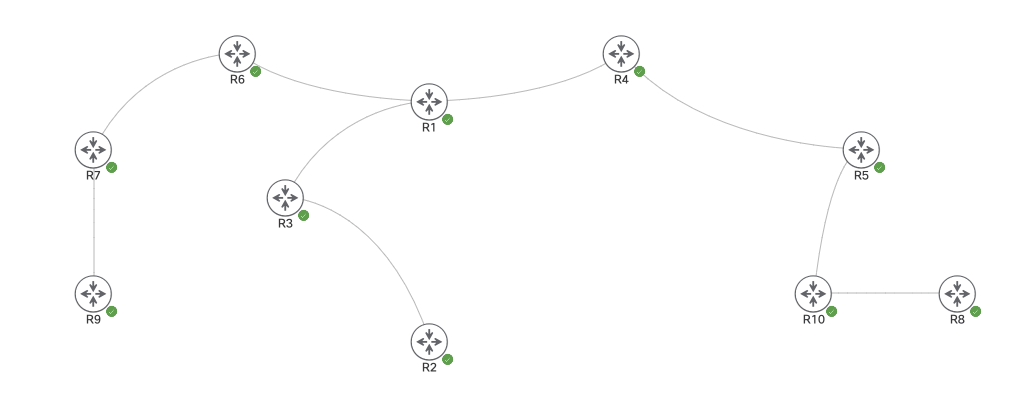

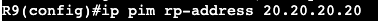

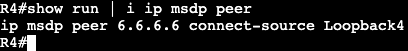

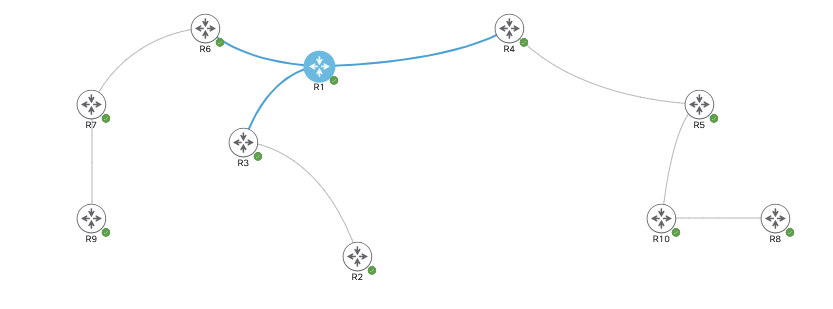

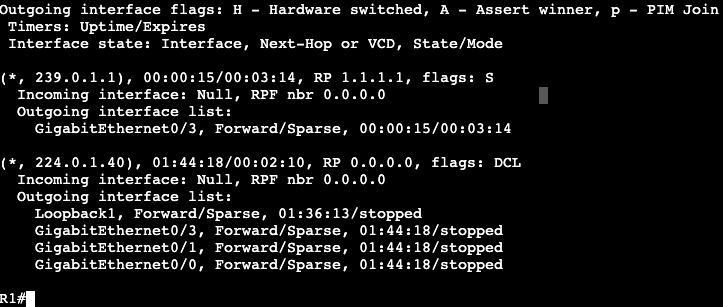

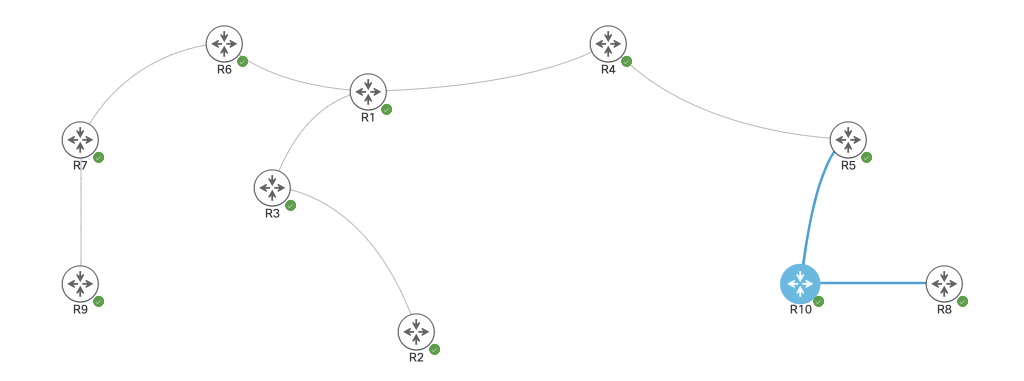

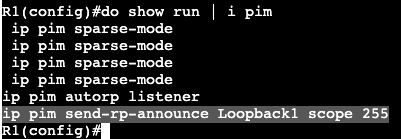

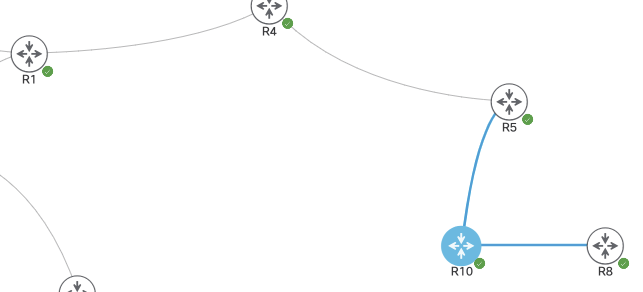

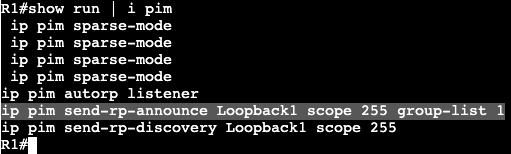

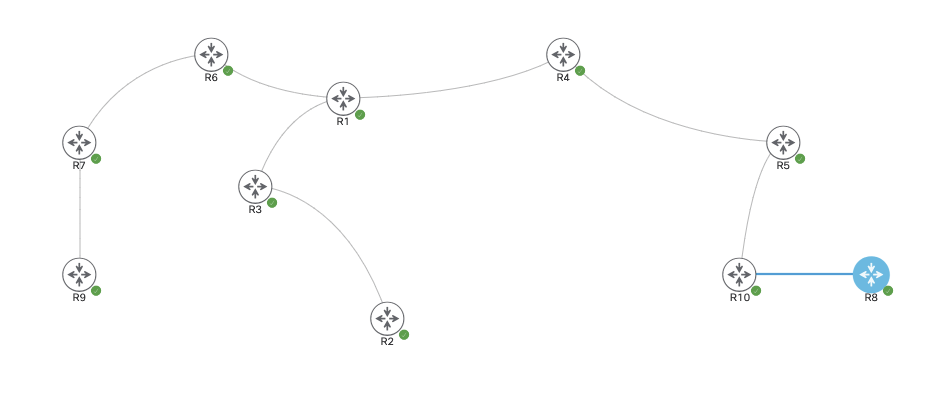

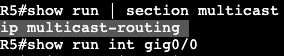

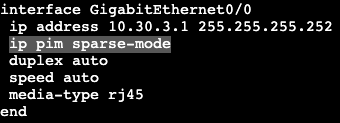

In the above topology we’re running OSPF on all routers in Area 0. Each router advertises a loopback address into the domain and has multicast routing enabled. However, there is no RP yet. To enable Auto-RP, we’ll first add the Auto-RP listener command (`ip pim autorp listener`) to each device.

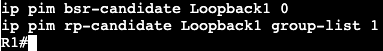

In this environment, R1 will become the RP. On R1 we first need to allow the router to send RP announcements (`ip pim send-rp-announce Loopback1 scope 255`). These announcements are sourced from interface Loopback1 with a scope (TTL) of 255.

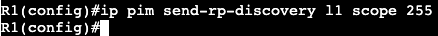

R1 will also be the mapping agent, so we enable RP discovery on it (`ip pim send-rp-discovery Loopback1 scope 255`), again sourced from interface Loopback1 with a TTL of 255.

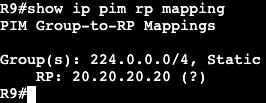

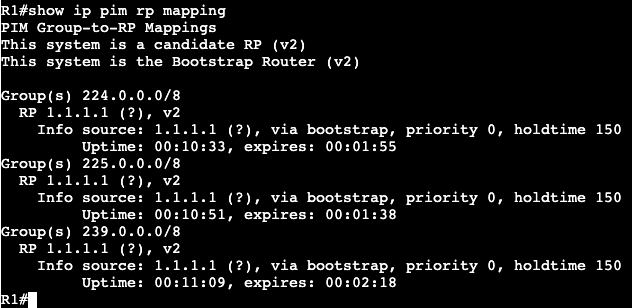

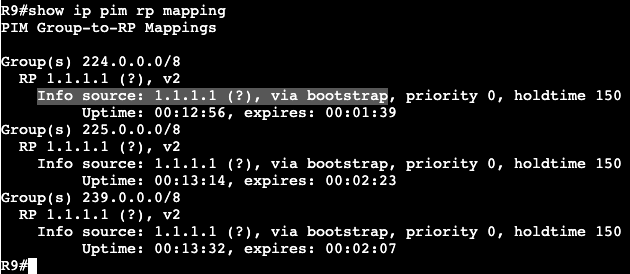

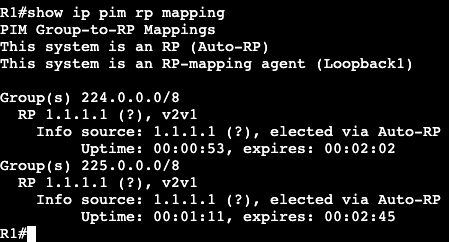

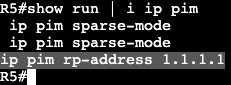

Shortly afterwards, ‘show ip pim rp mapping’ shows that this router is now both the Auto-RP-learned RP and the mapping agent.

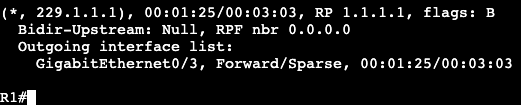

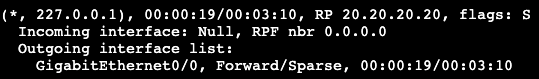

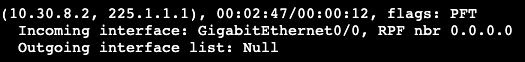

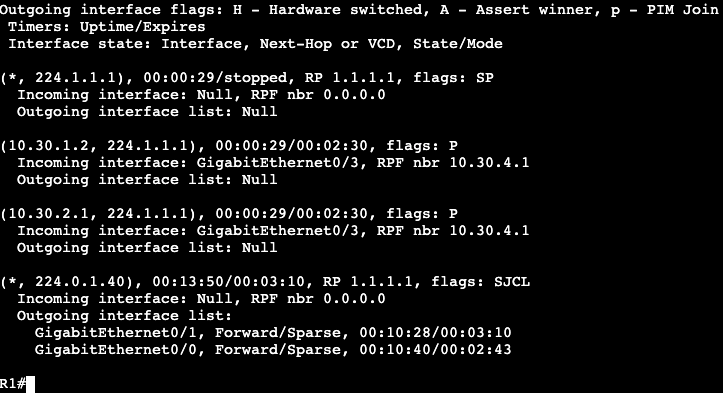

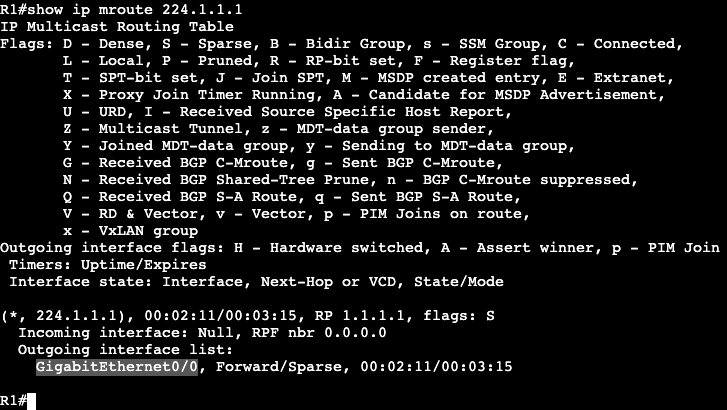

Now, if we head over to R9 and ping the group 225.1.1.1, the RP at R1 will have an (S, G) entry for 225.1.1.1 in its mroute table, with R9 (the router we pinged from) as the source.

The incoming interface is G0/0, the interface R1 uses to reach R9 according to the unicast routing table (the RPF interface). The outgoing interface list is Null because there are currently no receivers (group members).
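The incoming interface and Null outgoing interface list described above can be modeled as a small record. A minimal sketch, assuming hypothetical names: `MrouteEntry`, the source address `10.9.9.9`, and the interface names are illustrative only. A packet is replicated only if it arrives on the incoming interface and the OIL is not empty, so with a Null OIL it goes nowhere.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class MrouteEntry:
    source: str                         # "*" for shared-tree (*,G) entries
    group: str
    incoming_interface: Optional[str]   # RPF interface; None models "Null"
    outgoing_interfaces: Set[str] = field(default_factory=set)  # empty set models a Null OIL

    def replicate(self, arrival_interface: str) -> Set[str]:
        """Interfaces a packet for this entry is copied to (empty set = dropped)."""
        if self.incoming_interface is None or arrival_interface != self.incoming_interface:
            return set()  # wrong or unknown incoming interface
        return set(self.outgoing_interfaces)


if __name__ == "__main__":
    entry = MrouteEntry("10.9.9.9", "225.1.1.1", "GigabitEthernet0/0")
    print(entry.replicate("GigabitEthernet0/0"))  # set(): Null OIL, no receivers yet
    entry.outgoing_interfaces.add("GigabitEthernet0/1")  # a receiver has joined
    print(entry.replicate("GigabitEthernet0/0"))  # {'GigabitEthernet0/1'}
```

Adding an interface to the OIL stands in for a downstream join arriving, which is what the next step of the walkthrough triggers.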

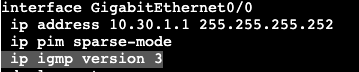

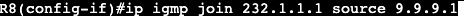

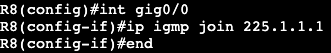

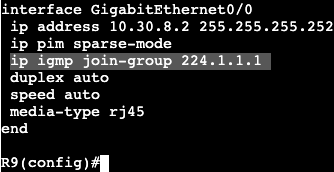

To add a group member, I’ll go over to R8, enter the configuration of the active interface towards the RP, and have the router join our newly created multicast group with an IGMP join (`ip igmp join-group 225.1.1.1`).

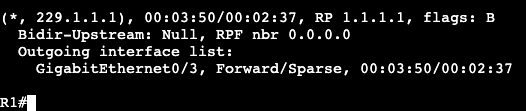

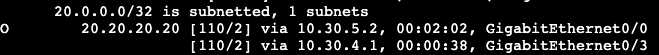

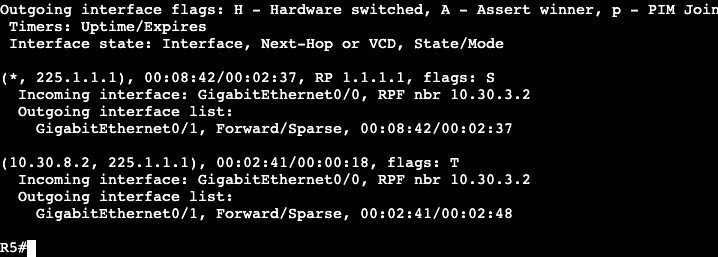

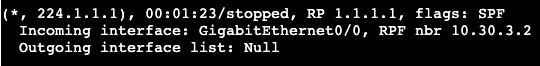

Now the mroute table on R10 shows a successful RPF check, and R10 knows the path for multicast group 225.1.1.1.
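The RPF check itself can be sketched as a longest-prefix lookup in the unicast routing table. This is an illustrative model with a hypothetical route table and function names: the address checked is the source for (S,G) entries and the RP for (*,G) entries, and the check passes only if the packet arrived on the interface the router would use to send unicast traffic back to that address.

```python
from ipaddress import IPv4Address, IPv4Network
from typing import Dict, Optional


def rpf_interface(address: str, unicast_routes: Dict[str, str]) -> Optional[str]:
    """Longest-prefix match in a unicast table mapping prefix -> outgoing interface."""
    target = IPv4Address(address)
    best_net: Optional[IPv4Network] = None
    best_iface: Optional[str] = None
    for prefix, iface in unicast_routes.items():
        net = IPv4Network(prefix)
        if target in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_iface = net, iface
    return best_iface


def rpf_check(address: str, arrival_interface: str, unicast_routes: Dict[str, str]) -> bool:
    """Pass only if the packet arrived on the interface used to route back to `address`."""
    return rpf_interface(address, unicast_routes) == arrival_interface


if __name__ == "__main__":
    routes = {"0.0.0.0/0": "GigabitEthernet0/2", "10.9.9.0/24": "GigabitEthernet0/1"}
    print(rpf_check("10.9.9.9", "GigabitEthernet0/1", routes))  # True
    print(rpf_check("10.9.9.9", "GigabitEthernet0/2", routes))  # False
```

Using the unicast table this way is what keeps multicast loop-free: a copy arriving on any other interface is dropped.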

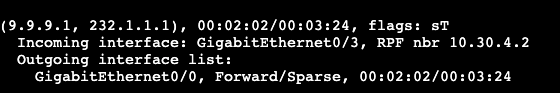

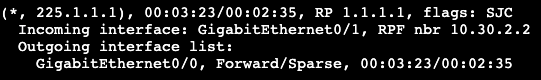

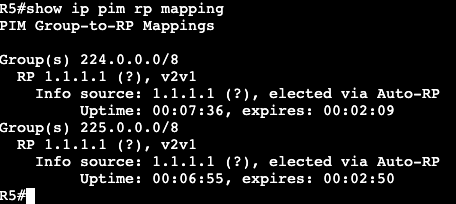

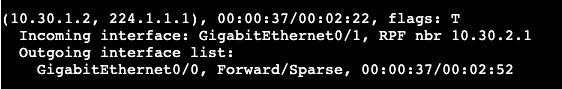

Again from R9, we’ll ping the multicast group 225.1.1.1. Now that there’s a group member, the ping gets a response. In addition, ‘show ip mroute’ shows the full (S, G) state on every router in the path. Here’s R5:

Auto-RP Security:

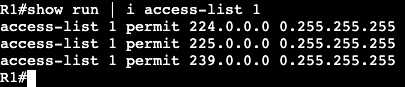

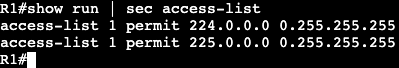

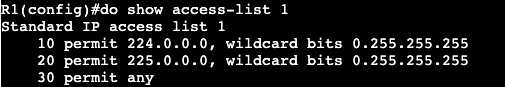

Currently on R1, ‘show ip pim rp mapping’ shows that we’re servicing the group range 224.0.0.0/4, which is basically every multicast group. To restrict this with an access-list, we could do the following:

Create an ACL:

Add the ACL to the PIM RP mapping:

After running ‘clear ip pim rp-mapping’, ‘show ip pim rp mapping’ shows that the serviced group range has shrunk to match our ACL.

The same reduced mapping appears on another router that isn’t the RP.

This means that if any router on the network tries to join a group outside 224.0.0.0/8 or 225.0.0.0/8, it has no RP for that group and cannot build a tree for it.
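Why groups outside those ranges lose their RP can be sketched with standard ACL semantics: each entry matches with a wildcard mask (set bits are "don't care"), the first match wins, and anything unmatched hits the implicit deny. The two entries below are a hypothetical ACL permitting the ranges named in the text, not the exact lines from the lab, and the function names are illustrative.

```python
from ipaddress import IPv4Address
from typing import List, Tuple

AclEntry = Tuple[str, str, str]  # (action, base address, wildcard mask)


def wildcard_match(address: str, base: str, wildcard: str) -> bool:
    """Bits set in the wildcard are "don't care"; all other bits must match."""
    care = ~int(IPv4Address(wildcard)) & 0xFFFFFFFF
    return (int(IPv4Address(address)) & care) == (int(IPv4Address(base)) & care)


def acl_permits(acl: List[AclEntry], address: str) -> bool:
    """First matching entry wins; no match means the implicit deny at the end."""
    for action, base, wildcard in acl:
        if wildcard_match(address, base, wildcard):
            return action == "permit"
    return False


# Hypothetical ACL permitting the two ranges named in the text.
ACL_1: List[AclEntry] = [
    ("permit", "224.0.0.0", "0.255.255.255"),
    ("permit", "225.0.0.0", "0.255.255.255"),
]

if __name__ == "__main__":
    print(acl_permits(ACL_1, "225.1.1.1"))  # True: inside 225.0.0.0/8
    print(acl_permits(ACL_1, "239.0.1.1"))  # False: implicit deny, so no RP mapping
```

Appending `("permit", "0.0.0.0", "255.255.255.255")` models the `permit any` added at the end of the walkthrough, after which 239.0.1.1 is permitted.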

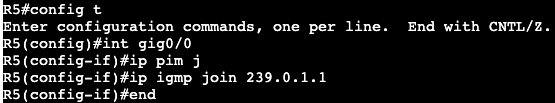

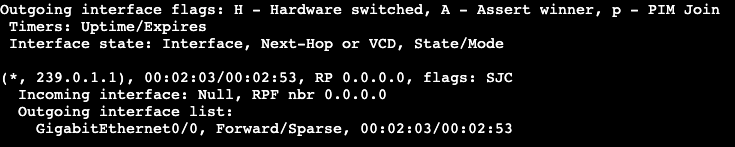

In the image above, after adding the ACL, I’ll have R5 join group 239.0.1.1.

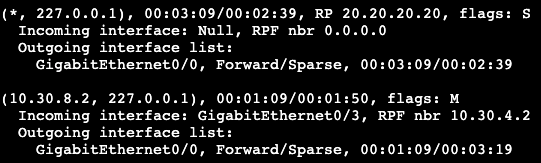

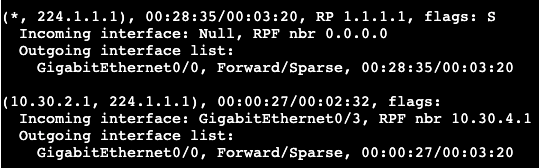

On R4, the mroute entry for 239.0.1.1 now shows an incoming interface of Null, because the router does not know which Rendezvous Point serves the new group.

After adding ‘permit any’ to access-list 1 and pinging group 239.0.1.1 again, R4 now shows the full SPT being built.