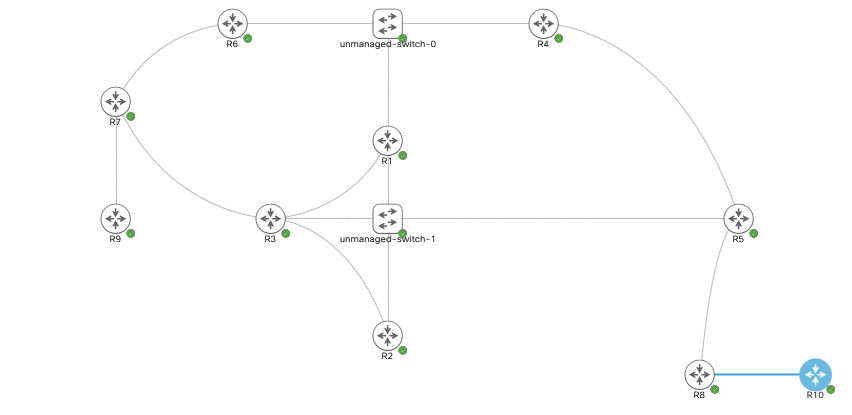

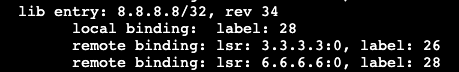

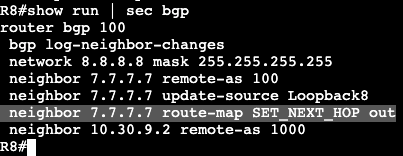

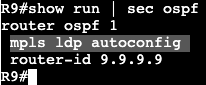

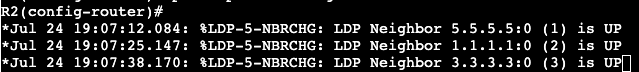

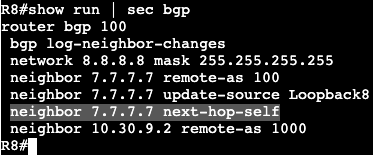

In the topology below, we’re running an MPLS L3VPN with BGP on both the provider and customer sides, i.e. PE/P and CE.

ASN List:

R9/R7 – CE, ASN 1000

R10 – CE, ASN 1000

All other devices – Running ASN 100.

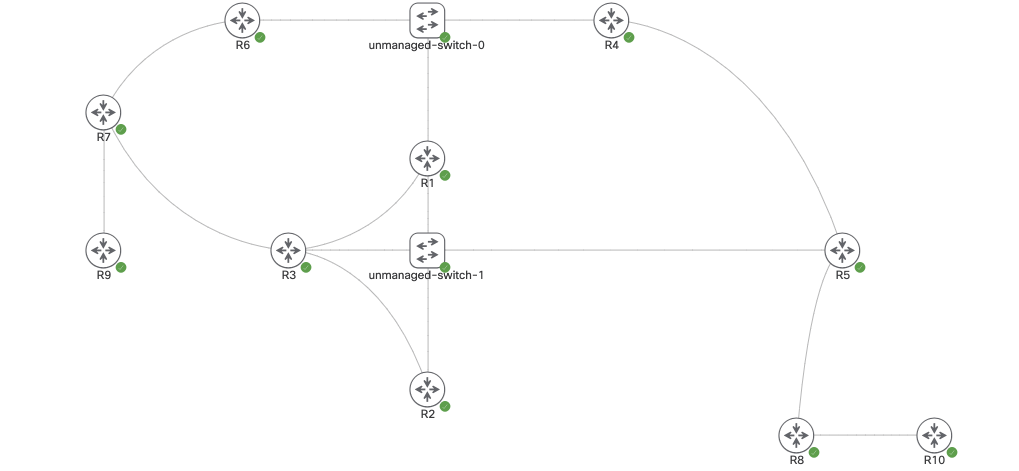

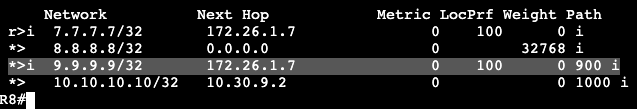

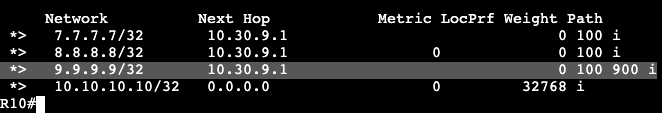

In this scenario the customer wants to run the same ASN on both remote ends of the MPLS L3VPN. R10 is advertising its loopback 10.10.10.10/32 across the VPN to R7 and R8, but the route is not showing up in the remote end’s routing tables.

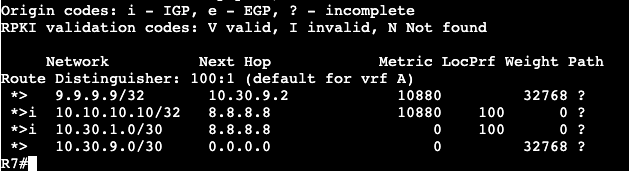

The route 10.10.10.10/32 is advertised and received at every hop up to R7, where it does not appear. The reason is BGP’s built-in loop prevention: when a router receives an eBGP route whose AS path already contains its own local ASN, it drops the route. R7 sits in ASN 1000, and the route originated by R10 already carries ASN 1000 in its path.
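The loop check described above can be modeled in a few lines of Python. This is only an illustrative sketch of the rule, not router code: the function name `accepts_route` is made up for this example, and the AS path shown assumes the provider (ASN 100) has prepended its ASN once on the way to R7.

```python
from typing import List


def accepts_route(local_asn: int, as_path: List[int]) -> bool:
    """Return True if an eBGP speaker in local_asn would keep a route with this AS path.

    BGP loop prevention: a route whose AS path already contains the
    receiver's own ASN is treated as a loop and dropped.
    """
    return local_asn not in as_path


# R10 (ASN 1000) originates 10.10.10.10/32; the provider (ASN 100)
# prepends its own ASN before handing the route to R7 (ASN 1000).
path_seen_by_r7 = [100, 1000]

print(accepts_route(1000, path_seen_by_r7))  # False: R7 finds its own ASN in the path
```

Because R7 and R10 share ASN 1000, the check fails on R7 even though no real loop exists, which is exactly the symptom seen in the routing table.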

AS-Override

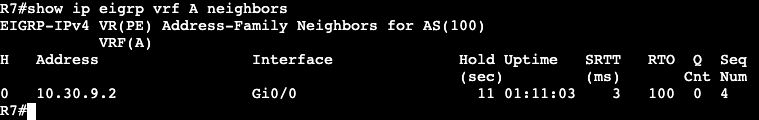

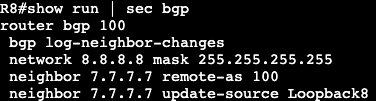

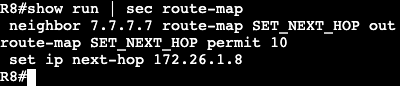

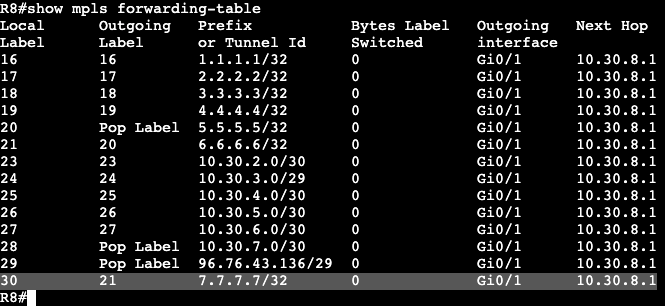

AS-Override is the first option to fix this. It is configured on R6 for its neighbor R7 and takes effect outbound: R6 replaces the customer ASN in the AS path with its own, which changes, from R7’s perspective, which ASN the route 10.10.10.10 is coming from.

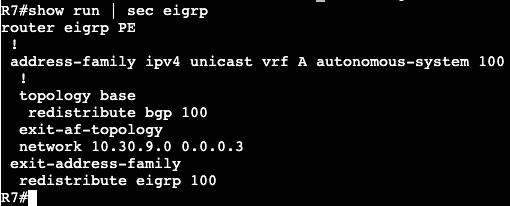

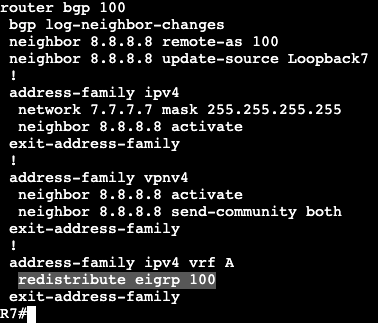

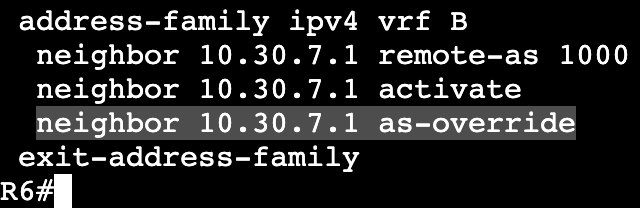

Under R6’s VRF address family, we add the as-override keyword to the neighbor command for R7.

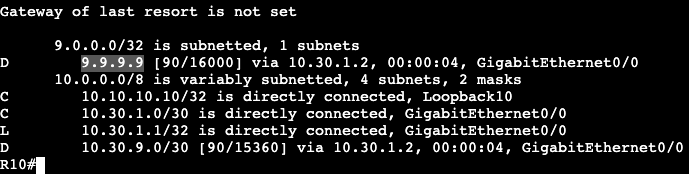

Now on R7 we see that R10’s loopback is successfully making it to the routing table via R6.
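To see why the route is now accepted, here is a small Python model of what AS-Override does to the AS path. It is a sketch under stated assumptions, not the router's implementation: the function name `as_override` is hypothetical, and the model assumes the PE replaces every occurrence of the neighbor's ASN with its own and then prepends its own ASN as on any eBGP advertisement.

```python
from typing import List


def as_override(as_path: List[int], pe_asn: int, neighbor_asn: int) -> List[int]:
    """Model a PE advertising a route to a CE neighbor with AS-Override enabled.

    Every occurrence of the neighbor's ASN is replaced with the PE's ASN,
    then the PE prepends its own ASN as usual for an eBGP update.
    """
    rewritten = [pe_asn if asn == neighbor_asn else asn for asn in as_path]
    return [pe_asn] + rewritten


# Inside the provider, R10's route still carries only the customer ASN.
path_at_r6 = [1000]

# R6 (ASN 100) advertises it to R7 (ASN 1000) with AS-Override.
path_seen_by_r7 = as_override(path_at_r6, pe_asn=100, neighbor_asn=1000)

print(path_seen_by_r7)              # [100, 100]
print(1000 not in path_seen_by_r7)  # True: R7 no longer sees its own ASN
```

The path length is preserved, but the customer ASN is gone from it, so R7's loop check passes.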

AS-Override creates another problem, though: it removes the original ASN from the AS path of the advertised route, which breaks BGP’s built-in loop prevention. With AS-Override in use, the route coming from R10 can be advertised back into ASN 1000 on R10’s side, creating a loop. One way to fix this is with a route tag called Site of Origin (SoO).

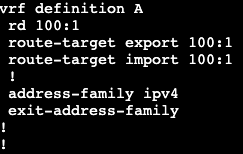

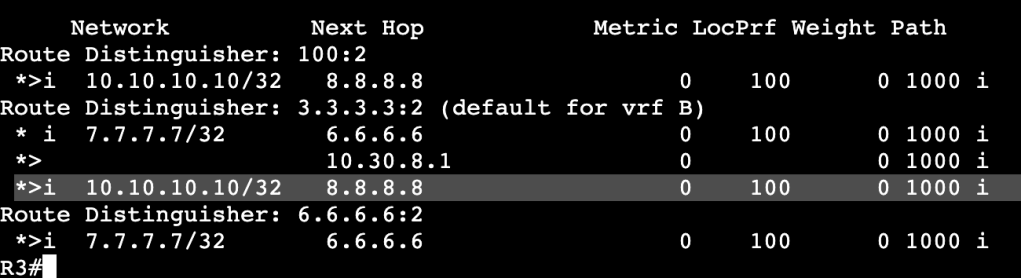

Site of Origin is an extended community that puts a tag on routes as they’re advertised. In this situation it allows R6 and R3 to compare tags; if the tags match, the two routers know they hold the same route and do not need to advertise it back to each other.

The configuration is straightforward. On R6 and R3 we’ll configure the following:

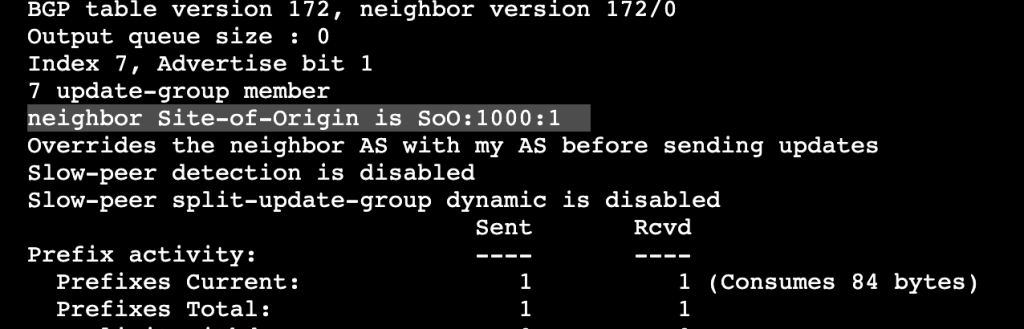

Under the address family we specify the SoO tag (extended community), which must match on both R6 and R3. Because the tags match, the routers know the route does not need to be advertised to each other, which would create a loop. The SoO can be seen with ‘show ip bgp vpnv4 all neighbor <neighbor address>’.
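The matching logic can be sketched in Python as well. This is a simplified model, not the router's actual behavior in every detail: the names `VpnRoute`, `receive_from_ce`, and `should_advertise_to_ce` are invented for illustration, and the SoO values `1000:10` and `1000:7` are hypothetical, not taken from the lab configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VpnRoute:
    prefix: str
    soo: Optional[str] = None  # Site of Origin extended community, if any


def receive_from_ce(prefix: str, neighbor_soo: Optional[str]) -> VpnRoute:
    """A PE tags a route learned from a CE with the SoO configured for that neighbor."""
    return VpnRoute(prefix, soo=neighbor_soo)


def should_advertise_to_ce(route: VpnRoute, neighbor_soo: Optional[str]) -> bool:
    """A PE withholds a route from a CE neighbor whose SoO matches the route's tag."""
    return route.soo is None or route.soo != neighbor_soo


# Hypothetical value: both PEs facing the same customer site use SoO 1000:10.
route = receive_from_ce("10.10.10.10/32", neighbor_soo="1000:10")

print(should_advertise_to_ce(route, neighbor_soo="1000:10"))  # False: same site, not sent back
print(should_advertise_to_ce(route, neighbor_soo="1000:7"))   # True: different site
```

The tag takes over the job that the AS path can no longer do once AS-Override has rewritten it: it identifies the site a route came from, independent of the ASN.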